What is meant by setup and/or hold violations: The ultimate aim of timing analysis is to get the design to work at the required frequency, and to do so reliably. For this to happen, timing analysis must ensure that all the state transitions happen smoothly; i.e., that the setup and hold requirements of all the timing paths in the design are met. If any path fails its setup and/or hold check, the design is said to have violations.

What if setup and/or hold violations occur in a design: As said earlier, setup and hold timing must be met to ensure that data launched from one flop is captured properly at another, in accordance with the state machine as designed. In other words, no timing violations means that the data launched by one flip-flop at one clock edge is captured by another flip-flop at the desired clock edge. If the setup check is violated, data will not be captured properly at the next clock edge. Similarly, if the hold check is violated, data intended to be captured at the next edge will be captured at the same edge. Moreover, setup/hold violations can cause data to change within the setup/hold window, which can lead to metastability of the capturing flip-flop (as explained in our post on metastability). So it is very important that setup and hold requirements are met for all the registers in the design, with no setup/hold violations.

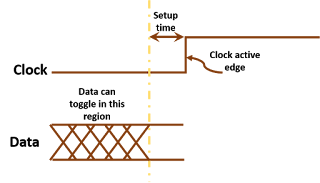

Setup violations: As we know, setup checks are applied for timing paths to get the state machine to move to the next state. The timing equation for a setup check from positive edge-triggered flip-flop to positive edge-triggered flip-flop is given as below:

Tck->q + Tprop + Tsetup - Tskew < Tperiod

For a timing path to meet setup requirements, this equation needs to be satisfied. The difference between left and right sides is represented by a parameter known as setup slack.

Setup slack is the margin by which a timing path meets the setup check requirement. It is given as the difference between the R.H.S. and the L.H.S. of the setup timing equation. The equation for setup slack is given as:

Setup slack = Tperiod - Tck->q - Tprop - Tsetup + Tskew

If setup slack is positive, the timing path meets the setup requirement. A negative setup slack, on the other hand, means the timing path violates setup. If, by chance, a fabricated design is found to have a setup violation, you can still run the design at a lower frequency than specified and get the desired functionality, since the setup equation includes the clock period as a variable.
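To make the slack arithmetic concrete, here is a minimal Python sketch of the setup equation. The function names and all the delay values are hypothetical, chosen only for illustration; times are assumed to be in nanoseconds and skew is taken as capture clock arrival minus launch clock arrival.

```python
def setup_slack(t_period, t_ck_to_q, t_prop, t_setup, t_skew=0.0):
    """Setup slack = Tperiod - Tck->q - Tprop - Tsetup + Tskew."""
    return t_period - t_ck_to_q - t_prop - t_setup + t_skew


def min_period(t_ck_to_q, t_prop, t_setup, t_skew=0.0):
    """Clock period at which the setup slack of the path reaches zero."""
    return t_ck_to_q + t_prop + t_setup - t_skew


# Hypothetical path, targeting a 2 ns clock period (500 MHz).
slack = setup_slack(t_period=2.0, t_ck_to_q=0.25, t_prop=1.75, t_setup=0.25)
print(f"setup slack at 2.0 ns: {slack:+.2f} ns")  # negative: the path violates setup

# Slowing the clock down recovers the path, since Tperiod is part of the equation.
t_min = min_period(t_ck_to_q=0.25, t_prop=1.75, t_setup=0.25)
print(f"period at which slack reaches zero: {t_min:.2f} ns")
```

With these made-up numbers the path misses a 2 ns clock by 0.25 ns, and the slack becomes positive for any period longer than 2.25 ns.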

If we analyze setup equation more closely, it involves four parameters:

- Data path delay: More the total delay of data path (flip-flop clock-to-Q delay + combinational delay), less is setup slack

- Clock skew: More the clock skew (difference between arrival times of clock at capture and launch flip-flops), more is the setup slack

- Setup time requirement of capturing flip-flop: Less the setup time requirement, more will be setup slack

- Clock period: More is the clock period, more is the setup slack. However, if you are targeting a specific clock period, doing this is not an option. :-)

Going by the above, a setup violation can be tackled by any of the following:

- Decreasing data path delay

- Choosing a flip-flop with less setup time requirement

- Increasing clock skew
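As a rough illustration of these three knobs, the sketch below applies each one in turn to the same failing path and prints the resulting setup slack. The `Path` class and every number in it are hypothetical; only the slack formula comes from the setup equation above.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Path:
    """Hypothetical flop-to-flop timing path; all times in ns."""
    t_period: float   # clock period
    t_ck_to_q: float  # clock-to-Q delay of the launch flop
    t_prop: float     # combinational delay
    t_setup: float    # setup time of the capture flop
    t_skew: float     # capture clock arrival minus launch clock arrival

    def setup_slack(self) -> float:
        return (self.t_period - self.t_ck_to_q - self.t_prop
                - self.t_setup + self.t_skew)


failing = Path(t_period=2.0, t_ck_to_q=0.25, t_prop=1.75, t_setup=0.25, t_skew=0.0)

# Each entry changes exactly one parameter of the failing path.
fixes = {
    "baseline": failing,
    "decrease data path delay": replace(failing, t_prop=1.25),
    "flop with less setup time": replace(failing, t_setup=0.125),
    "increase clock skew": replace(failing, t_skew=0.5),
}

for name, path in fixes.items():
    print(f"{name:28s} slack = {path.setup_slack():+.3f} ns")
```

In this made-up case every knob improves the slack, but the smaller setup time alone is not enough to close the path, so in practice the fixes are often combined.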

Our post "How to fix setup violations" discusses various ways to tackle setup violations.

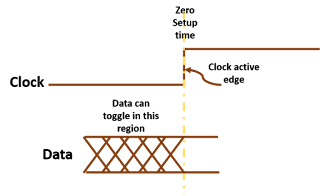

Hold violations: As we know, hold checks are applied to ensure that the state machine remains in its present state until desired. The hold timing check for a timing path from a positive edge-triggered flip-flop to another positive edge-triggered flip-flop is governed by the following equation:

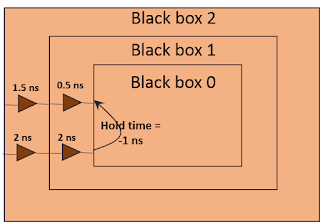

Tck->q + Tprop > Thold + Tskew

Similar to setup slack, the presence and magnitude of a hold violation is governed by a parameter called hold slack. The hold slack is defined as the amount by which the L.H.S. is greater than the R.H.S. In other words, it is the margin by which the timing path meets the hold timing check. The equation for hold slack is given as:

Hold slack = Tck->q + Tprop - Thold - Tskew

If hold slack is positive, there is still some margin available before the path starts violating hold. A negative hold slack means the path is violating the hold timing check by the amount represented by hold slack. To get the path met, either the data path delay should be increased, or the clock skew or the hold requirement of the capturing flop should be decreased.
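The hold check can be sketched the same way. The function below follows the hold inequality Tck->q + Tprop > Thold + Tskew, so skew is subtracted from the slack; the function name and the values are hypothetical, with times assumed to be in nanoseconds.

```python
def hold_slack(t_ck_to_q, t_prop, t_hold, t_skew=0.0):
    """Hold slack = (Tck->q + Tprop) - (Thold + Tskew).

    The clock period does not appear in this expression.
    """
    return t_ck_to_q + t_prop - t_hold - t_skew


# Hypothetical short path with very little logic between the two flops.
no_skew = hold_slack(t_ck_to_q=0.25, t_prop=0.125, t_hold=0.125)
with_skew = hold_slack(t_ck_to_q=0.25, t_prop=0.125, t_hold=0.125, t_skew=0.5)

print(f"hold slack without skew:     {no_skew:+.3f} ns")   # positive: hold is met
print(f"hold slack with 0.5 ns skew: {with_skew:+.3f} ns")  # negative: hold violation
```

The same path that meets hold comfortably with balanced clocks starts violating once the capture clock arrives 0.5 ns later than the launch clock.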

If we analyze hold timing equation more closely, it involves three parameters:

- Data path delay: More data path delay favours hold slack; hence, more data path delay, more is the margin

- Skew: Having a positive skew degrades hold slack

- Hold requirement of capturing flip-flop: Less the hold requirement, more will be hold slack

How to tackle hold violations: Similar to setup analysis, the ultimate aim of hold analysis is to get every timing path to follow the hold timing equation, so that each and every timing path in the design has a positive hold slack. If a timing path violates hold, we can do any of the following:

- Increase data path delay

- Decrease clock skew

- Choose a flip-flop with less hold requirement
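As an illustration of the first option, the sketch below estimates how many delay buffers would have to be added to the data path to bring a hold-violating path back to non-negative slack. The per-buffer delay, the helper names, and the path numbers are all assumptions made for this example, not values from any real library.

```python
import math

BUFFER_DELAY = 0.125  # ns, hypothetical delay of one inserted buffer


def hold_slack(t_ck_to_q, t_prop, t_hold, t_skew):
    """Hold slack = (Tck->q + Tprop) - (Thold + Tskew)."""
    return t_ck_to_q + t_prop - t_hold - t_skew


def buffers_needed(slack, buffer_delay=BUFFER_DELAY):
    """Fewest buffers whose combined delay brings the slack to zero or above."""
    if slack >= 0:
        return 0
    return math.ceil(-slack / buffer_delay)


# Hypothetical hold-violating path.
before = hold_slack(t_ck_to_q=0.25, t_prop=0.125, t_hold=0.125, t_skew=0.4375)
n = buffers_needed(before)

# The inserted buffers add to the combinational delay of the data path.
after = hold_slack(t_ck_to_q=0.25, t_prop=0.125 + n * BUFFER_DELAY,
                   t_hold=0.125, t_skew=0.4375)

print(f"hold slack before: {before:+.4f} ns")
print(f"buffers inserted:  {n}")
print(f"hold slack after:  {after:+.4f} ns")
```

Keep in mind that the same added delay also enters the setup equation of that path, so its setup slack shrinks by the same amount.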