We have always heard that for asynchronous resets, only de-assertion needs to be timed. This holds for most designs, because of the guidelines commonly followed when implementing asynchronous resets. However, there may be scenarios in which reset assertion also needs to be covered by timing checks. In this post, we will try to shed some light on them.

* Reset assertion "combinationally" causes the output to go to 0; i.e., assertion of reset does not wait for an edge of the clock to alter the state of the flip-flop.

* Reset de-assertion waits for clock edge to propagate the value at "input" to "output". There are checks corresponding to de-assertion of reset with respect to clock known as "recovery" and "removal" checks.
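The two behaviours above can be sketched with a small behavioural model. This is only an illustration, not a timing-accurate simulation: the class name `AsyncResetFlop` and its methods are made up for this example, and the reset is assumed to be active-high.

```python
class AsyncResetFlop:
    """Behavioural model of a D flip-flop with an active-high asynchronous reset.

    Hypothetical helper for illustration only; delays are not modelled.
    """

    def __init__(self):
        self.q = 0
        self.reset = 0

    def set_reset(self, value):
        # Assertion acts immediately, without waiting for a clock edge.
        self.reset = value
        if value:
            self.q = 0
        # De-assertion alone changes nothing: Q stays at 0 until a clock edge.

    def clock_edge(self, d):
        # An active clock edge samples D only while reset is de-asserted.
        if not self.reset:
            self.q = d
        return self.q


flop = AsyncResetFlop()
flop.clock_edge(1)
q_after_capture = flop.q      # D was captured on the clock edge

flop.set_reset(1)
q_after_assert = flop.q       # cleared with no clock edge involved

flop.set_reset(0)
q_after_deassert = flop.q     # unchanged by de-assertion itself

flop.clock_edge(1)
q_after_next_edge = flop.q    # new data appears only on the next edge
```

The model shows why "recovery" and "removal" are tied to de-assertion: that is the only reset event whose effect depends on where the clock edge falls.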

Thus, we see that "recovery" and "removal" checks are defined only for de-assertion of asynchronous reset. So, one might think that there is no timing requirement for reset assertion. This is true in the sense that there is no requirement for reset assertion at the flip-flop that is receiving the reset, and a carefully implemented design has no such requirement overall. But sometimes there may be a corner-case scenario requiring the combinational path through "reset -> output" to be timed. We will discuss some cases to elaborate on this statement.

CASE 1: The output of flip-flop goes to another flip-flop, which itself is getting reset at the same time.

Here, since the flip-flop in the fanout is itself in reset, it will not be sampling the output. Hence, there is no need to time the assertion of reset.

CASE 2: The output of flip-flop goes to an asynchronous domain or to a synchronizer which is not, itself, in reset. Here, the flip-flop in the asynchronous domain is expected to be a synchronizer in most cases, so there is no need to meet timing.

CASE 3: The output of flip-flop goes to a flip-flop which is working on a synchronous clock and is expecting synchronous data. In this case, we will have to meet timing through the R -> Q arc of the source flip-flop up to capture at the destination. We need to keep in mind that a reset synchronizer also propagates reset assertion through R -> Q. So, this case cannot be valid for global asynchronous reset assertion. For instance, consider the scenario below.

For the case below, we would have to meet reset assertion timing along the path

ASYNC_RESET_SOURCE -> REG_B/R -> REG_B/Q -> REG_C/R -> REG_C/Q -> REG_D/D

Since the reset source works on an asynchronous clock, capturing it on a flip-flop that is running functionally and expecting synchronous data is a design violation.

Thus, there may not be any scenario in which asynchronous reset assertion needs to be timed globally. However, state machines may locally utilize asynchronous reset assertion to get things done. For instance, you must have come across a common problem known as "conversion of asynchronous counter to decade counter", wherein the asynchronous reset pin is utilized to reset the count whenever the count reaches 10. We will simplify it to "conversion of 2-bit asynchronous counter to modulo-3 counter" to serve our purpose.

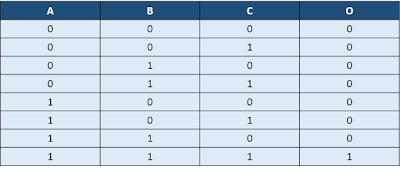

Consider the 2-bit asynchronous counter design shown in the figure. We have shown two flip-flops that register the output of this counter, for illustration purposes and to make the explanation clearer. Flip-flop "BIT_1" gets the output of "BIT_0" as its clock. All other flip-flops get CLK as their clock. Whenever the outputs of both "BIT_1" and "BIT_0" go to 1, the reset of both flops is asserted and the output goes to "00" in the same cycle. This "00" is expected to reach the registers before the next clock edge so that it can be captured properly. The intermediate state "11" is therefore never captured at the next stage.
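The counting behaviour described here can be sketched with a zero-delay model. The function `simulate_mod3` is hypothetical, and it rests on assumptions that are not spelled out in the post: BIT_0 toggles on every CLK edge, BIT_1 toggles on the falling edge of BIT_0 (a ripple up-counter), the reset is the AND of the two outputs, and everything settles before the next CLK edge.

```python
def simulate_mod3(num_cycles):
    """Return the (REG_1, REG_0) values captured on each CLK edge.

    Zero-delay sketch: assumes the reset loop always settles within a cycle,
    which is exactly what the timing paths in this post must guarantee.
    """
    bit1 = bit0 = 0
    captured = []
    for _ in range(num_cycles):
        # REG_1/REG_0 sample the counter value that settled before this edge.
        captured.append((bit1, bit0))

        # BIT_0 toggles on every CLK edge (assumption).
        prev_bit0 = bit0
        bit0 ^= 1

        # BIT_1 is clocked by BIT_0; assumed to toggle on its falling edge.
        if prev_bit0 == 1 and bit0 == 0:
            bit1 ^= 1

        # Asynchronous reset: as soon as both outputs are 1, both flops clear.
        # The transient "11" exists only inside this cycle.
        if bit1 and bit0:
            bit1 = bit0 = 0
    return captured


sequence = simulate_mod3(6)
```

Under these assumptions the registers see 00, 01, 10 repeating, and never 11, provided the real reset loop finishes within one clock period.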

This gives the following timing paths, each of which must be met within a single clock cycle (less setup time, skew, etc.):

BIT_1/CK -> BIT_1/Q -> BIT_1/R -> BIT_1/Q -> REG_1/D

BIT_1/CK -> BIT_1/Q -> BIT_0/R -> BIT_0/Q -> REG_0/D

BIT_0/CK -> BIT_0/Q -> BIT_1/R -> BIT_1/Q -> REG_1/D

BIT_0/CK -> BIT_0/Q -> BIT_0/R -> BIT_0/Q -> REG_0/D
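Each of the four paths above has the same shape: a CK -> Q arc, the reset-generation logic, an R -> Q arc, and the route to the capturing D pin. A rough slack check could look like the sketch below. All delay numbers, the clock period, and the name `path_slack_ps` are made-up values for illustration, and clock skew is ignored.

```python
# Hypothetical numbers in picoseconds; none of them come from a real library.
CLOCK_PERIOD_PS = 2000
SETUP_PS = 100

# Segments of one path, e.g. BIT_0/CK -> BIT_0/Q -> BIT_1/R -> BIT_1/Q -> REG_1/D
SEGMENT_DELAYS_PS = {
    "CK->Q of launching flop": 300,
    "reset logic (Q to R)": 150,
    "R->Q of flop being reset": 400,
    "route Q to capturing D": 50,
}


def path_slack_ps(segment_delays, period_ps, setup_ps):
    """Setup slack = required time - arrival time, with zero skew assumed."""
    arrival = sum(segment_delays.values())
    required = period_ps - setup_ps
    return required - arrival


slack = path_slack_ps(SEGMENT_DELAYS_PS, CLOCK_PERIOD_PS, SETUP_PS)
meets_timing = slack >= 0
```

The point of the sketch is that the R -> Q arc sits in the middle of a path that ends at a synchronous D pin, so it has to be counted in the arrival time like any other cell delay.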

Thus, this is an example of a scenario wherein we need timing for assertion of reset as well. The reset assertion propagates combinationally through the "R -> Q" arc of the counter flip-flops and is captured at the next flip-flops. Can you deduce the paths to be timed for the original case of "asynchronous counter as a decade counter" as well?

Another possible care-about for asynchronous reset assertion is degradation of the duty cycle when the output is used as a clock, as in the highlighted path, where Q0 is consumed as the clock for the "BIT_1" flip-flop. This can cause the minimum pulse width requirement of the "BIT_1" flip-flop to be violated. This perspective of reset assertion is discussed here.
