Hi friends, in the post "State machines – a practical perspective", we learnt about state machines. We also discussed different aspects of a state machine with the help of an example, and the need for setup and hold checks. In this post, we will discuss the state machine with a pinch of setup and hold and try to build a better understanding of both. For a recap, see figure 1. Each clock edge in a digital state machine represents a state. At each clock edge, every register in the design updates its value based on the data available at its input, and that data is computed from the values launched by other registers at the previous clock edge. In the simplest terms, state 3 depends on state 2, which, in turn, depends on state 1, and so on.

Figure 1: State machine representation of a clock pulse

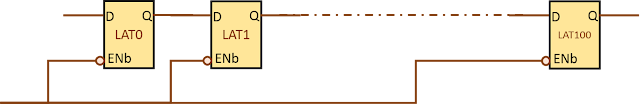
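To see this dependency chain in code, here is a minimal Python sketch of our own (not taken from the original post): the state machine is modelled as a function that maps the current state to the next one, and applying it once per clock edge shows how each state is built only from the one before it. The 2-bit up-counter used as next-state logic is a hypothetical example chosen for brevity.

```python
from typing import Callable, List, Tuple

State = Tuple[int, ...]


def run_state_machine(
    initial: State, next_state: Callable[[State], State], edges: int
) -> List[State]:
    """Return the initial state followed by the state after each clock edge."""
    states = [initial]
    for _ in range(edges):
        # The state after edge k is computed only from the state after edge k - 1.
        states.append(next_state(states[-1]))
    return states


def counter_next(state: State) -> State:
    """Hypothetical next-state logic: a 2-bit up-counter held as (MSB, LSB)."""
    value = ((state[0] << 1) | state[1]) + 1
    value %= 4
    return ((value >> 1) & 1, value & 1)


if __name__ == "__main__":
    for index, state in enumerate(run_state_machine((0, 0), counter_next, 5)):
        print(f"state {index}: {state}")
```

Changing the initial state changes every later state, which is the "state 3 depends on state 2, which depends on state 1" chain in miniature.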

Most digital systems are synchronous systems (state machines), which require all the elements of the state machine to work in harmony. For instance, say a state machine has 100 registers. All these registers need to be updated at the same time and need synchronized inputs so that the state machine only ever passes through valid states. In a digital synchronous state machine, all the registers are synchronized by a clock signal, and setup and hold checks are what ensure that every register captures its input in step with that clock. But the question of why we need setup and hold checks remains unanswered.
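A small Python sketch can show why the registers must update in harmony. It models a hypothetical chain of registers (a simple shift register, not a circuit from this post) in two ways: a synchronous edge, where every register samples the values held before the edge and all of them update together, and a deliberately broken edge, where registers update one after another so that each one can see a neighbour that has already changed during the same edge.

```python
from typing import List


def clock_edge_synchronous(regs: List[int], data_in: int) -> List[int]:
    """All registers sample their inputs at the same instant, then update together."""
    # Each register's next value is read from the values held *before* the edge.
    return [data_in] + regs[:-1]


def clock_edge_unsynchronized(regs: List[int], data_in: int) -> List[int]:
    """Broken model: registers update one after another within the same edge."""
    regs = list(regs)
    regs[0] = data_in
    for i in range(1, len(regs)):
        # Reads the neighbour's value that was already overwritten during this edge.
        regs[i] = regs[i - 1]
    return regs


if __name__ == "__main__":
    chain = [0] * 100  # hypothetical chain of 100 registers, all reset to 0
    print(sum(clock_edge_synchronous(chain, 1)))     # 1: only the first register holds the 1
    print(sum(clock_edge_unsynchronized(chain, 1)))  # 100: the 1 raced through every register
```

With a 1 at the input, one synchronous edge moves the 1 into the first register only, whereas the unsynchronized version lets it race through all 100 registers in a single edge, a state the design never intended.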

Why setup and hold? We often hear people say that meeting the setup and hold requirements of a design is critical for silicon functionality. But have you ever thought about why? As we know from our previous post, for a design consisting only of positive edge-triggered registers, each positive clock edge corresponds to a state, and the state of the machine is updated at every clock edge since all the flip-flops capture data. In other words, the state of the machine is a function of the values held by its registers in a particular clock cycle. For the state machine to function properly, the value launched from one register at one clock edge must reach the input of the capturing flop before the next clock edge arrives, and must not reach it until the present clock edge has passed (this will become clear later on). Strictly speaking, the data must arrive a setup time before the next edge and must stay stable for a hold time after the present edge. This is necessary for the next state of the state machine to be a valid state. If it does not happen, the state of the machine will not be what is desired, and the machine may even go into an altogether invalid state, leading to undesired results. This is the reason setup is checked on the next edge and hold on the same edge, as discussed below.
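The "before the next edge, but not before the present edge has passed" requirement is usually written as two inequalities for a path between two positive edge-triggered flops. For setup: launch clock + clock-to-Q + logic delay <= period + capture clock - setup time. For hold: launch clock + clock-to-Q + logic delay >= capture clock + hold time. The Python sketch below expresses them as setup and hold slacks, where a negative slack means a violation. The field names and the picosecond numbers are hypothetical, and the model ignores effects such as clock uncertainty and on-chip variation that real static timing tools also account for.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TimingPath:
    """One launch flop -> logic -> capture flop path; all times in picoseconds."""
    period: int         # clock period T
    launch_clock: int   # clock arrival time at the launching flop
    capture_clock: int  # clock arrival time at the capturing flop
    clk_to_q: int       # launching flop clock-to-Q delay
    logic_delay: int    # combinational delay between the two flops
    setup_time: int     # setup requirement of the capturing flop
    hold_time: int      # hold requirement of the capturing flop

    @property
    def data_arrival(self) -> int:
        return self.launch_clock + self.clk_to_q + self.logic_delay

    def setup_slack(self) -> int:
        # Data must arrive a setup time before the *next* edge at the capture flop.
        return (self.period + self.capture_clock - self.setup_time) - self.data_arrival

    def hold_slack(self) -> int:
        # New data must not arrive before a hold time after the *same* edge.
        return self.data_arrival - (self.capture_clock + self.hold_time)


if __name__ == "__main__":
    nominal = TimingPath(period=1000, launch_clock=0, capture_clock=0,
                         clk_to_q=50, logic_delay=300, setup_time=20, hold_time=10)
    print(nominal.setup_slack(), nominal.hold_slack())  # 630 340
    # Capture clock 400 ps late (positive skew) with a very short logic path.
    skewed = TimingPath(period=1000, launch_clock=0, capture_clock=400,
                        clk_to_q=50, logic_delay=30, setup_time=20, hold_time=10)
    print(skewed.setup_slack(), skewed.hold_slack())  # 1300 -330
```

In the second path, the large positive skew leaves plenty of setup slack but makes the hold slack negative, which is the positive-skew scenario discussed later in this post.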

Let us assume a hypothetical state machine consisting of three registers and some logic gates, as shown in figure 2 below. If we assume the initial outputs of REG1 and REG2 to be 1 and 0 respectively, the only possible states are 100 and 010.

Figure 2: A state machine with three registers and some logic

The state transitions of this state machine are shown in figure 3. As shown, there are only two valid states (100 and 010), provided the initial states of REG1 and REG2 differ (10 or 01). REG3 is supposed to always remain '0', since REG1 and REG2 are supposed to hold different values at any given time (given that the initial condition also satisfies this).
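Since the exact gates of figure 2 are not spelled out in the text, the Python sketch below assumes one hypothetical logic that matches the behaviour described above: REG1 takes REG2's output, REG2 takes REG1's output, and REG3 takes the XNOR of the two, so REG3 stays 0 as long as REG1 and REG2 differ. It traces the state after each clock edge and builds a full transition table; under this assumed logic, an invalid state such as 000 moves to 001 and stays there instead of returning to the 100/010 loop.

```python
from typing import Dict, List, Tuple

State = Tuple[int, int, int]  # (REG1, REG2, REG3)


def next_state(state: State) -> State:
    """Hypothetical logic for figure 2: REG1 <- REG2, REG2 <- REG1, REG3 <- XNOR."""
    reg1, reg2, _ = state
    return (reg2, reg1, 1 - (reg1 ^ reg2))


def as_bits(state: State) -> str:
    return "".join(str(bit) for bit in state)


def trace(initial: State, edges: int) -> List[str]:
    """The starting state followed by the state after each clock edge."""
    states = [initial]
    for _ in range(edges):
        states.append(next_state(states[-1]))
    return [as_bits(s) for s in states]


def transition_table() -> Dict[str, str]:
    """Present state -> next state for all eight 3-bit combinations."""
    table = {}
    for value in range(8):
        state = ((value >> 2) & 1, (value >> 1) & 1, value & 1)
        table[as_bits(state)] = as_bits(next_state(state))
    return table


if __name__ == "__main__":
    print(trace((1, 0, 0), 4))  # ['100', '010', '100', '010', '100']
    for present, nxt in transition_table().items():
        print(present, "->", nxt)
```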

Figure 3: State diagram of state machine shown in figure 2

The states with respect to the clock waveform are shown in figure 4 below. As expected, each clock edge corresponds to one of the two states.

Figure 4: Clock waveforms showing different states of state machine

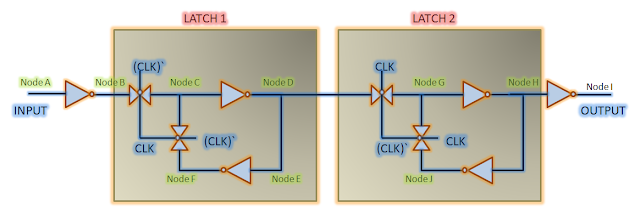

Now, the value of register 2 in a particular state (clock edge) depends on the value of register 1 at the previous clock edge. Let us assume register 2 receives a delayed clock with respect to register 1; in other words, there is considerable positive skew between the two registers. In this case, as shown in figure 5 below, there is a good chance that the data launched from register 1 is captured by register 2 at the same edge rather than at the next clock edge. As a result, registers 1 and 2 will hold the same value in a particular clock cycle, and the machine will run into an invalid state. Data being captured at the same edge at which it was launched is termed a hold violation (unless it is architecturally intended, which is outside the scope of this topic).

Figure 5: Hold violation resulting in state machine going to invalid state
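The hold problem can be reproduced in a short Python sketch, again assuming the hypothetical logic REG1 <- REG2, REG2 <- REG1, REG3 <- XNOR(REG1, REG2), since the gates of figure 2 are not given. The skewed case is modelled by letting REG2 capture the value REG1 launched at the same edge, while REG3 is assumed to see an unskewed clock and therefore still samples the pre-edge values.

```python
from typing import Callable, List, Tuple

State = Tuple[int, int, int]  # (REG1, REG2, REG3)


def step_timing_met(state: State) -> State:
    reg1, reg2, _ = state
    # Every register samples the values that existed just before the edge.
    return (reg2, reg1, 1 - (reg1 ^ reg2))


def step_hold_violation(state: State) -> State:
    reg1, reg2, _ = state
    new_reg1 = reg2
    # REG2's clock arrives late (positive skew) and the REG1 -> REG2 path is fast,
    # so REG2 captures the value REG1 launched at this same edge.
    new_reg2 = new_reg1
    # Assumption: REG3's clock is not skewed, so it samples the pre-edge values.
    new_reg3 = 1 - (reg1 ^ reg2)
    return (new_reg1, new_reg2, new_reg3)


def run(step: Callable[[State], State], initial: State, edges: int) -> List[str]:
    states = [initial]
    for _ in range(edges):
        states.append(step(states[-1]))
    return ["".join(str(bit) for bit in s) for s in states]


if __name__ == "__main__":
    print(run(step_timing_met, (1, 0, 0), 3))      # ['100', '010', '100', '010']
    print(run(step_hold_violation, (1, 0, 0), 3))  # ['100', '000', '001', '001']
```

Starting from 100, the timing-met model alternates between the two valid states, while the hold-violation model reaches 000 at the very first edge (REG1 and REG2 now equal) and then settles in 001, an invalid state it never leaves.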

Likewise, for the proper functioning of the state machine, the data launched at one edge should get captured at the next edge. This is what the setup check verifies; thus, the setup check is performed on the next edge. A failure to do so is termed a setup violation. Conversely, the data launched at one edge should not be captured on the same edge. The hold check represents this situation and ensures that data launched on one edge is not captured on the same edge.
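A setup violation can be sketched in the same spirit. Here, still with the hypothetical logic assumed above, the REG1-to-REG2 path is taken to be slower than one clock period (but faster than two), so at each edge REG2 captures the value REG1 launched two edges earlier instead of one. This simplified Python model ignores metastability, which a real setup violation can also cause, and assumes the machine was in the valid state 010 just before it reached 100.

```python
from typing import List


def simulate(edges: int, reg2_capture_lag: int) -> List[str]:
    """Return the (REG1, REG2, REG3) state at edge 0 and after each later edge.

    reg2_capture_lag is how many edges before the current one REG2's data was
    launched: 1 means timing is met, 2 models a setup violation where the
    REG1 -> REG2 path is longer than one clock period.
    """
    if reg2_capture_lag not in (1, 2):
        raise ValueError("reg2_capture_lag must be 1 (timing met) or 2 (setup violation)")
    # Assumed history: state 010 at edge -1 (index 0), then 100 at edge 0 (index 1).
    reg1 = [0, 1]
    reg2 = [1, 0]
    reg3 = [0, 0]
    for _ in range(edges):
        k = len(reg1)  # list index of the edge being evaluated
        reg1.append(reg2[k - 1])                      # REG2 -> REG1 path meets timing
        reg2.append(reg1[k - reg2_capture_lag])       # REG1 -> REG2 data may be stale
        reg3.append(1 - (reg1[k - 1] ^ reg2[k - 1]))  # XNOR of the pre-edge values
    # Drop the edge -1 history entry.
    return [f"{a}{b}{c}" for a, b, c in zip(reg1[1:], reg2[1:], reg3[1:])]


if __name__ == "__main__":
    print(simulate(3, 1))  # ['100', '010', '100', '010']
    print(simulate(4, 2))  # ['100', '000', '011', '100', '000']
```

With `reg2_capture_lag=1` the machine alternates between 100 and 010; with `reg2_capture_lag=2` REG2 keeps missing the data meant for it, and the machine wanders through invalid states such as 000 and 011.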

Based on the understanding developed in this post, setup and hold can be defined as follows:

Setup check: The setup check refers to the condition that data launched at one clock edge should get captured at the next clock edge, so that the state machine functionality is preserved and the machine transitions smoothly from one state to the next. A failure to meet this condition is termed a setup violation, and the state machine might transition to an invalid state.

Hold check: The hold check refers to the condition that data launched at one clock edge should not get captured at the same clock edge, so that the present state of the state machine is not corrupted. A failure to meet this condition is termed a hold violation, and the state machine might transition to an invalid state.