Definition of multicycle paths: By definition, a multi-cycle path is one in which data launched from one flop is allowed (through architecture definition) to take more than one clock cycle to reach the destination flop. This is ensured architecturally by gating either the data or the clock from reaching the destination flop.

There can be many such scenarios inside a System on Chip where we can apply multi-cycle paths, as discussed later. In this post, we discuss the architectural aspects of multicycle paths. For timing aspects such as application and analysis, please refer to Multicycle paths handling in STA.

Why multi-cycle paths are introduced in designs: A typical System on Chip consists of many components working in tandem. Each of these works at a different frequency depending upon performance and other requirements. Ideally, the designer would want the maximum possible throughput from each component in the design while respecting power, timing and area constraints. The designer may consider introducing multi-cycle paths in the design in one of the following scenarios:

1) Very large data-path limiting the frequency of the entire component: Let us take a hypothetical case in which one of the components is to be designed to work at 500 MHz; however, one of the data-paths is too large to work at this frequency. Let us say the minimum delay this data-path can achieve is 3 ns. Thus, if we assume all the paths to be single cycle, the component cannot work at more than 333 MHz; however, if we ignore this path, the rest of the design can attain 500 MHz without much difficulty. We can therefore sacrifice this one path so that the rest of the component works at 500 MHz. To do so, we make that particular path a multi-cycle path of two cycles (4 ns at 500 MHz), so that it effectively works at 250 MHz, sacrificing performance for that one path only.

2) Paths starting from slow clock and ending at fast clock: For simplicity, let us suppose there is a data-path with one start-point and one end-point, where the start-point receives a clock at half the frequency of the end-point clock. Now, the start-point can only send data at half the rate at which the end-point can receive it. Therefore, for this path, there is no gain in running the end-point at double the clock frequency. Also, since the data is launched only once every two cycles, we can modify the architecture such that the data is received after a gap of one cycle. In other words, instead of a single-cycle data-path, we can afford a two-cycle data-path in such a case. This will actually save power, as the data now has two cycles to traverse to the end-point, so cells of lower drive strength, with less area and power, can be used. Also, if the multi-cycle path has been implemented through a clock enable (discussed later), clock power will also be saved.
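The arithmetic of scenario 1 can be checked with a small Python sketch. The function names are hypothetical, and the model is deliberately simplified: it assumes ideal clocks and ignores setup time, clock skew and uncertainty.

```python
import math

def max_single_cycle_mhz(path_delay_ns: float) -> float:
    """Highest clock frequency at which the path still fits in one cycle."""
    return 1000.0 / path_delay_ns

def min_multicycle(path_delay_ns: float, clock_mhz: float) -> int:
    """Smallest whole number of clock cycles that covers the path delay."""
    period_ns = 1000.0 / clock_mhz
    # The small epsilon guards against floating-point noise on exact multiples.
    return math.ceil(path_delay_ns / period_ns - 1e-9)

def effective_rate_mhz(clock_mhz: float, cycles: int) -> float:
    """Rate at which new data can pass through a path of the given multicycle."""
    return clock_mhz / cycles

# Numbers from the example: a 3 ns path in a 500 MHz component.
cycles = min_multicycle(3.0, 500.0)
print(round(max_single_cycle_mhz(3.0)))   # single-cycle limit in MHz
print(cycles)                             # multicycle value needed
print(effective_rate_mhz(500.0, cycles))  # effective rate of that path in MHz
```

With these inputs the path needs two cycles, which is where the 250 MHz figure for that one path comes from.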

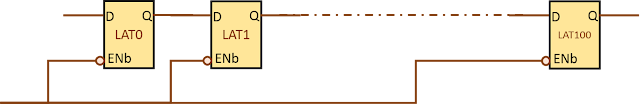

Implementation of multi-cycle paths in architecture: Let us discuss some of the ways of introducing multi-cycle paths in the design:

1) Through gating in data-path: Refer to figure 1 below, wherein the ‘Enable’ signal gates the data-path towards the capturing flip-flop. By controlling the waveform of the enable signal, we can make the path multi-cycle. As shown in the waveform, if the enable signal toggles once every three cycles, the data at the end-point can change only once every three cycles. Hence, the data launched at edge ‘1’ is captured by the capturing flop only at edge ‘4’. This gives a multi-cycle of 3, with a total of 3 cycles for the data to traverse to the capture flop. Thus, in this case, the setup check is 3 cycles and the hold check is 0 cycles.

Figure 1: Introducing multicycle paths in design by gating data path
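The behaviour of the enable-gated data-path can be sketched as a cycle-level Python model. This is only an illustration, not the circuit from the figure: the function name is hypothetical, and it assumes that the same enable qualifies both the launching and the capturing flop, so both update only on enabled edges.

```python
def simulate_data_gating(num_edges: int, multicycle: int):
    """Cycle-level model of two flops that update only on enabled clock edges.

    Returns one tuple per clock edge: (edge, enable, launch_q, capture_q).
    Edges are numbered from 1; the enable is taken as active at edges
    1, 1 + multicycle, 1 + 2 * multicycle, ... (an assumption of this sketch).
    """
    launch_q = None    # output of the launching flop
    capture_q = None   # output of the capturing flop
    history = []
    for edge in range(1, num_edges + 1):
        enable = (edge - 1) % multicycle == 0
        if enable:
            # Both flops sample on the same edge, so the capturing flop
            # takes the value the launching flop held before this edge.
            capture_q, launch_q = launch_q, f"D{edge}"
        history.append((edge, enable, launch_q, capture_q))
    return history

for row in simulate_data_gating(num_edges=7, multicycle=3):
    print(row)
```

In this model the value launched at edge 1 (`D1`) shows up at the capturing flop at edge 4, matching the three-cycle setup check described above.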

Now let us extend this discussion to the case wherein the launch clock is half the frequency of the capture clock. Let us say Enable changes once every two cycles. Here, the intention is to make the data-path a multi-cycle of 2 relative to the faster clock (the capture clock here). As is evident from figure 2 below, it is important that the Enable signal takes the proper waveform, as shown on the right-hand side of figure 2. In this case, the setup check will be two cycles of the capture clock and the hold check will be 0 cycles.

Figure 2: Introducing multi-cycle path where launch clock is half in frequency to capture clock
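To see what an N-cycle setup check and a 0-cycle hold check mean numerically, here is a rough Python sketch. The function name and the delay values are hypothetical, and the model assumes ideal clocks with no skew, with the launch edge at time 0.

```python
def multicycle_slacks(capture_period_ns: float, n_cycles: int,
                      max_delay_ns: float, min_delay_ns: float,
                      setup_ns: float = 0.0, hold_ns: float = 0.0):
    """Return (setup_slack_ns, hold_slack_ns) for an n_cycles multicycle path.

    Setup is checked n_cycles capture-clock periods after the launch edge;
    hold is checked at the launch edge itself (a 0-cycle check).
    """
    setup_edge_ns = n_cycles * capture_period_ns
    hold_edge_ns = 0.0
    setup_slack = setup_edge_ns - setup_ns - max_delay_ns
    hold_slack = min_delay_ns - (hold_edge_ns + hold_ns)
    return setup_slack, hold_slack

# Illustrative numbers only: 2 ns capture clock, multicycle of 2,
# slowest path 3 ns, fastest path 0.5 ns, ideal flops.
print(multicycle_slacks(2.0, 2, max_delay_ns=3.0, min_delay_ns=0.5))
```

A positive setup slack here means the 3 ns path meets the two-cycle check, although it would fail a single-cycle check at the same clock period.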

2) Through gating in clock path: Similarly, we can make the capturing flop capture data once every few cycles by clipping the clock. In other words, send to the capturing flip-flop only those clock pulses at which you want the data to be captured. This can be done similarly to the data-path masking discussed in point 1, the only difference being that the enable masks the clock signal going to the capturing flop. This kind of gating is more advantageous in terms of power: since the capturing flip-flop does not receive the clock on the masked cycles, we save clock power as well.

Figure 3: Introducing multi cycle paths through gating the clock path

Figure 3 above shows how multicycle paths can be achieved with the help of clock gating. The enable signal, in this case, is launched from a negative edge-triggered register due to architectural reasons. With the enable waveform as shown in figure 3, the flop gets a clock pulse once every four cycles. Thus, we can have a multicycle path of 4 cycles from launch to capture. The setup check and hold check for this case are also shown in figure 3. The setup check will be a 4-cycle check, whereas the hold check will be a zero-cycle check.
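The clock-gating scheme can also be sketched at half-cycle resolution in Python. This is a simplified model under stated assumptions, not the exact circuit of figure 3: the function name is hypothetical, the gating cell is assumed to be a plain AND of clock and enable, and the enable is assumed to change only while the clock is low (as it would when launched from a negative-edge flop).

```python
def gated_clock_edges(num_cycles: int, every: int):
    """Return the clock edge numbers (1-based) that reach the capturing flop.

    Each cycle is split into a high half and a low half. The enable is
    updated only in the low half, modelling a negative-edge launch.
    """
    enable = 1          # assumed initial state so that edge 1 passes
    prev_gated = 0
    passed_edges = []
    for step in range(2 * num_cycles):
        clk = 1 if step % 2 == 0 else 0
        cycle = step // 2               # 0-based cycle index
        if clk == 0:
            # Clock is low: decide whether the NEXT rising edge should pass.
            enable = 1 if (cycle + 1) % every == 0 else 0
        gated = clk & enable            # AND-type clock gate
        if gated and not prev_gated:
            passed_edges.append(cycle + 1)
        prev_gated = gated
    return passed_edges

print(gated_clock_edges(num_cycles=9, every=4))
```

In this model the capturing flop sees edges 1, 5 and 9 only, so data launched at edge 1 is captured at edge 5, which corresponds to the 4-cycle setup check.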

Pipelining v/s introducing multi-cycle paths: Making a long data-path reach its destination in two cycles can alternatively be implemented by pipelining the logic. In most cases, this is a much simpler approach than making the path multi-cycle. Pipelining means splitting the data-path into two halves and putting a flop between them, essentially making the data-path two cycles. This approach also eases timing at the cost of the performance of the data-path. However, at the level of the whole component, it lets us run the component at a higher frequency. In some situations, though, it is not economical to insert pipeline flops, as there may not be suitable points available. In such a scenario, we have to go with the approach of making the path multi-cycle.
