How to interpret default setup and hold checks

Default Setup/hold checks - positive flop to negative flop timing paths

The launch/capture event of a positive edge-triggered flip-flop happens on every positive edge of the clock, whereas that of a negative edge-triggered flip-flop occurs on every negative edge of the clock. In this post, we will discuss the default setup/hold checks for different cases: same clock, 1:N clock ratio and N:1 clock ratio. This should cover all the possible cases of setup/hold checks.

Case 1: Both flip-flops getting same clock

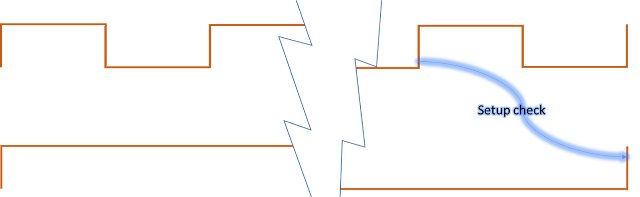

Figure 1 shows a timing path from a positive edge-triggered flip-flop to a negative edge-triggered flip-flop. Let us say the data is launched at instant of time "T", which is a positive edge. Then, the next negative edge following time "T" is the edge that captures this data, thus forming the default setup check. The negative edge immediately preceding time "T" forms the hold check. This is shown in the first part of figure 1. Thus, in this case, both setup and hold checks are half-cycle checks.

Setup and hold slack equations

Setup slack = Period(clk)/2 + Tskew - Tclk_q - Tcomb - Tsetup

Hold slack = Period(clk)/2 + Tclk_q + Tcomb - Tskew - Thold
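As a quick sanity check of the two equations above, here is a minimal Python sketch. The function name, argument names and the numbers (in ns) are illustrative assumptions, not values from any real library or design.

```python
def same_clock_pos_to_neg_slacks(period, t_clk_q, t_comb, t_setup, t_hold, t_skew=0.0):
    """Default slacks for a positive-edge launch flop feeding a
    negative-edge capture flop on the same clock (Case 1)."""
    half_cycle = period / 2.0
    # Setup: data must arrive before the negative edge half a cycle after launch.
    setup_slack = half_cycle + t_skew - t_clk_q - t_comb - t_setup
    # Hold: data must not disturb the negative edge half a cycle before launch.
    hold_slack = half_cycle + t_clk_q + t_comb - t_skew - t_hold
    return setup_slack, hold_slack


# Hypothetical numbers: 10 ns clock, 0.5 ns clk-to-q, 3 ns logic, no skew.
setup, hold = same_clock_pos_to_neg_slacks(
    period=10.0, t_clk_q=0.5, t_comb=3.0, t_setup=0.2, t_hold=0.1
)
print(f"setup slack = {setup:.2f} ns, hold slack = {hold:.2f} ns")
```

With these assumed numbers the hold slack is much larger than the setup slack, which is what we expect when the hold check sits half a cycle behind the launch edge.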

Case 2: Flip-flops getting clocks with frequency ratio N:1 and positive edge of launch clock coincides with negative edge of capture clock

One of the cases where this happens is when the clock is divided by an even number. Another is when odd division is followed by inversion. The resulting waveform is as shown in figure 2. In this case, each positive edge of the launch clock is capable of launching fresh data, but that data will be overwritten by the next launch. Only the data launched on the positive edge closest to the negative edge of the capture clock gets captured at the endpoint. Similarly, the data launched at the edge coinciding with the negative edge of the capture clock must not overwrite the data captured at that same edge. The setup and hold checks thus formed are shown in figure 2. The setup check is a full cycle of the launch clock, whereas the hold check is a zero-cycle check.

Setup and hold slack equations

Setup slack = period(launch_clock) + Tskew - Tclk_q - Tcomb - Tsetup

Hold slack = Tclk_q + Tcomb - Tskew - Thold

Case 3: Flip-flops getting clocks with frequency ratio N:1 and positive edge of launch clock coincides with positive edge of capture clock

One of the cases where this happens is when capture of the data happens on an odd divided clock. The resulting setup and hold checks are as shown in figure 3. Both setup and hold checks are half cycle of faster launch clock.

Setup and hold slack equations

Setup slack = period(launch_clock)/2 + Tskew - Tclk_q - Tcomb - Tsetup

Hold slack = period(launch_clock)/2 + Tclk_q + Tcomb - Tskew - Thold

Case 4: Flip-flops getting clocks with frequency ratio 1:N and positive edge of launch clock coincides with negative edge of capture clock

One of the cases is when division is performed after inversion of the master clock and data is launched on the divided clock. Figure 4 shows the default setup/hold checks for this case. In this case, setup check is equal to full cycle of faster clock and hold check is a zero cycle check.

Setup and hold slack equations

Setup slack = period(capture_clock) + Tskew - Tclk_q - Tcomb - Tsetup

Hold slack = Tclk_q + Tcomb - Tskew - Thold

Case 5: Flip-flops getting clocks with frequency ratio 1:N and positive edge of launch clock coincides with positive edge of capture clock

This is a case of even division, or inversion, followed by odd division, followed by inversion. Both the setup and hold checks are equal to half a cycle of the faster clock.

Setup and hold slack equations

Setup slack = period(capture_clock)/2 + Tskew - Tclk_q - Tcomb - Tsetup

Hold slack = period(capture_clock)/2 + Tclk_q + Tcomb - Tskew - Thold
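The slack equations of Cases 2 to 5 differ only in how many cycles of the faster clock the setup and hold checks span. The Python sketch below captures that as a small lookup table. The table and function names are assumptions made for illustration; the cycle counts are the ones stated in the cases above.

```python
# (setup cycles, hold cycles) in units of the FASTER clock's period.
# Cases 2 and 3: launch clock is faster. Cases 4 and 5: capture clock is faster.
DEFAULT_WINDOWS = {
    2: (1.0, 0.0),  # launch posedge coincides with capture negedge
    3: (0.5, 0.5),  # launch posedge coincides with capture posedge
    4: (1.0, 0.0),  # launch posedge coincides with capture negedge
    5: (0.5, 0.5),  # launch posedge coincides with capture posedge
}


def multi_rate_slacks(case, fast_period, t_clk_q, t_comb, t_setup, t_hold, t_skew=0.0):
    """Return (setup_slack, hold_slack) for Cases 2 to 5."""
    setup_cycles, hold_cycles = DEFAULT_WINDOWS[case]
    setup_slack = setup_cycles * fast_period + t_skew - t_clk_q - t_comb - t_setup
    hold_slack = hold_cycles * fast_period + t_clk_q + t_comb - t_skew - t_hold
    return setup_slack, hold_slack


# Hypothetical numbers in ns: faster clock period 5 ns, 0.5 ns clk-to-q, 2 ns logic.
for case in sorted(DEFAULT_WINDOWS):
    setup, hold = multi_rate_slacks(case, 5.0, 0.5, 2.0, 0.2, 0.1)
    print(f"Case {case}: setup slack = {setup:.2f} ns, hold slack = {hold:.2f} ns")
```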

Can you think of any other scenario of setup/hold checks for this case? Please feel free to share your views.

Clock relationship between reset synchronizer and fanout flip-flops

Design problem: Reset synchronizer clock for multi-frequency flip-flops in fanout

Figure 1: Reset synchronizer

Figure 2: Reset synchronizer works on positive edge of 100 MHz clock

Figure 3: Reset synchronizer works on positive edge of 200 MHz clock coinciding with positive edge of 100 MHz clock

Figure 4: Reset synchronizer works on positive edge of 200 MHz clock coinciding with negative edge of 100 MHz clock

Now, let us explore the last option, i.e., the reset synchronizer working on the negative edge of the 100 MHz clock. In this case, as shown in figure 5, both the 100 MHz and 200 MHz flip-flops come out of reset on the same edge. Thus, this case works perfectly.

Figure 5: Reset synchronizer works on negative edge of 100 MHz clock

Can you suggest any other solution that is possible and better than the ones discussed here?

Data check timing paths

Constraining data-check timing paths: To constrain data-check timing paths, we first need to ensure that there is a data check associated with the signals in question. It can either be defined in the timing model being picked, or we can define it using the SDC construct "set_data_check". Once the data check is defined, we only need to ensure that both the reference signal and the constrained signal are launched from the same clock or related clocks for the data-check timing path to be reported.

Clock gating timing paths

- The endpoint is the "EN" pin of Integrated Clock Gating (ICG) cell OR

- The endpoint is one of the input pins of a combinational cell with at least one of the other pins getting a clock signal

Why is the sum of setup time and hold time always positive

Reg-to-out paths

Constraining with virtual clock is helpful when we know that the data-path budgeting is exclusive of clock path, for instance, a sub-design of an SoC. A virtual clock is a clock without any source. So, data-path outside the block can be modeled using "set_output_delay" with respect to virtual clock and clock path outside the block can be modeled using "set_clock_latency" for virtual clock. The steps are listed below:

- "create_clock" at clock source : CLK

- "create_clock" without a clock source : VCLK (virtual clock)

- set_output_delay at output port with respect to VCLK

When we know that the outside data path delay and clock path delay are fixed, then we can constrain the port with respect to real clock itself. The steps are listed below:

- "create_clock" at clock source : CLK

- "set_output_delay" at output_port with respect to CLK (or with respect to a clock related to CLK)

In-to-reg paths

Constraining with virtual clock is helpful when we know that the data-path budgeting is exclusive of clock path, for instance, a sub-design of an SoC. A virtual clock is a clock without any source. So, data-path outside the block can be modelled with "set_input_delay" with respect to virtual clock and clock path outside the block can be modeled using "set_clock_latency" for virtual clock. The steps are listed below:

- "create_clock" at clock source

- "create_clock" without any source (virtual clock)

- set_input_delay at input_port with respect to virtual clock created

When we know that the outside data path delay and clock path delay are fixed, then we constrain the input port with respect to real clock itself. An example is SoC level protocol signals such as ethernet signals. The input port is constrained either with respect to the same clock going to the endpoint or some clock related to it. The steps are listed below:

- "create_clock" CLK at clock source

- "set_input_delay" at input_port with respect to CLK (or with respect to a generated_clock created from the CLK)

Reg-to-reg paths

- Flop-to-flop paths: Both startpoint and endpoint are edge-triggered (flops). See setup and hold checks for flop-to-flop paths

- Flop-to-latch paths: Startpoint is edge-triggered and endpoint is level-sensitive. See setup and hold checks for flop-to-latch paths

- Latch-to-flop paths: Startpoint is level-sensitive and endpoint is edge-triggered. See setup and hold checks for latch-to-flop paths

- Latch-to-latch paths: Both startpoint and endpoint are level-sensitive. See setup and hold checks for latch-to-latch paths

- All the components of a timing path we discussed in timing paths, i.e. startpoint, endpoint, launch clock path, capture clock path and data path exist for a reg-to-reg path.

- To constrain reg-to-reg paths, we just have to ensure that both the startpoint and endpoint receive a valid clock signal and there is no timing exception (such as false path between the clocks) masking the timing path.

Timing path types

Figure 1: Generic timing path

In-to-reg path: The timing path where "startpoint" is an input port and "endpoint" is a sequential element, is termed as in-to-reg path.

Reg-to-out path: Here, "startpoint" is a sequential element and "endpoint" is an output port.

In-to-out path: In this type of path, "startpoint" is an input port and "endpoint" is an output port.

Clock gating paths: In this type of path, "startpoint" can be any of a sequential element, an input port or an output port. The endpoint is usually an input pin of either a combinational gate or an Integrated Clock Gating cell (ICG). The common scenario is to time the arrival of the constrained signal (termed as enable in clock gating paths) such that complete pulses of the clock, which is the reference signal, are transmitted and there is no glitch at the output of the "endpoint".

Min-pulse-width-check paths: Here, both the reference path and the constrained path are clock paths, and they are common right from the source till the "endpoint". This type of path compares the latest arrival of the rise transition of the clock with the earliest arrival of the fall transition of the clock, and vice-versa. The nature of the check is max check only.

Data check paths: In this type of paths, both reference signal and constrained signal are data launched by a clock signal.

Point-to-point paths: The paths with only a constrained signal are called point-to-point paths. "startpoint" as well as "endpoint" can be any sequential or combinational pin or port.
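To make the min-pulse-width comparison described above concrete, here is a small Python sketch. It assumes the worst-case pulse is measured from the latest leading edge to the earliest trailing edge at the endpoint; the function name and the numbers are hypothetical.

```python
def min_pulse_width_slack(latest_leading_edge, earliest_trailing_edge, required_width):
    """Slack of a min-pulse-width check at a clock pin.

    The narrowest pulse that can reach the pin starts at the latest arrival of
    the leading edge and ends at the earliest arrival of the trailing edge.
    """
    worst_case_width = earliest_trailing_edge - latest_leading_edge
    return worst_case_width - required_width


# Hypothetical high pulse (times in ns): the rise arrives at 1.2 at the latest,
# the fall arrives at 5.9 at the earliest, and the cell needs a 4.0 ns high pulse.
slack = min_pulse_width_slack(1.2, 5.9, 4.0)
print(f"min pulse width slack = {slack:.2f} ns")
```

For the low pulse ("vice-versa" in the text), the same function can be called with the latest fall arrival as the leading edge and the earliest arrival of the next rise as the trailing edge.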

Timing paths

A timing path can be thought of as consisting of two sub-paths: a reference path through which the reference signal traverses and a constrained path through which the constrained signal traverses. Both of these essentially originate from the same source (or have a definite relationship at their respective sources). At the terminal end of both, there is a relationship governing the arrival of the constrained signal with respect to the arrival of the reference signal. The type of path is determined by the type of reference signal and constrained signal, the type of elements encountered by these and the check that is formed between the two. For instance, in a reg-to-reg setup path, the reference signal is the clock, the constrained signal is data launched from a clock and traversing through a flip-flop, and the check that is formed between the two signals is a setup check at a flip-flop as the endpoint.

Figure 1: Generic timing path in STA

Based upon type of check being formed between constrained signal and reference signal, there are commonly two types of paths that are formed: max path/setup check path and hold check path/min path.

Max/setup check path: In this kind of path, the earliest arrival of the reference signal and the latest arrival of the constrained signal are considered. The kind of check is known as setup check in most of the cases, and the type of path is called setup path/max path.

Min/hold check path: In this kind of path, the earliest arrival of the constrained signal and the latest arrival of the reference signal are considered. The kind of check is known as hold check in most of the cases, and the type of path is called hold path/min path.
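The two definitions above can be written as a pair of arrival-time comparisons. The following Python sketch assumes all arrival times are measured against a common time origin and that the reference arrival already includes any cycle adjustment for the check; the function names and numbers are illustrative only.

```python
def max_check_slack(earliest_reference, latest_constrained, setup_time):
    """Setup (max) check: the latest constrained arrival must come at least
    setup_time before the earliest reference arrival."""
    return (earliest_reference - setup_time) - latest_constrained


def min_check_slack(latest_reference, earliest_constrained, hold_time):
    """Hold (min) check: the earliest constrained arrival must come at least
    hold_time after the latest reference arrival."""
    return earliest_constrained - (latest_reference + hold_time)


# Hypothetical arrival times in ns.
print(max_check_slack(earliest_reference=10.3, latest_constrained=8.0, setup_time=0.2))
print(min_check_slack(latest_reference=0.5, earliest_constrained=1.1, hold_time=0.1))
```

A positive return value means the check is met with that much margin, and a negative value means it is violated.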

Let us move to the commonly perceived understanding of a timing path by taking an example of a reg-to-reg path. Figure 2 below shows an example of a timing path, which starts from a flip-flop and ends at a flip-flop.

Figure 2: Components of a reg-to-reg path

The above timing path (or any timing path, in general), has following components:

Startpoint: The element from which the data gets launched is known as startpoint. In general, it can be a sequential element (latch, flip-flop) or an input port. In case it is a flip-flop, the clock pin of the flip-flop is counted as the startpoint of timing path. For point-to-point paths, it can also be a combinational input or output pin.

Endpoint: The element at which timing path ends is called the endpoint. It can be data pin of flip-flop or an output port. For point-to-point paths, it can also be a combinational input or output pin.

Clock: Most of the timing paths are constrained by a clock signal, which clocks both startpoint and endpoint. The properties of the clock signal, such as clock period, jitter etc are defined in timing constraints.

Launch clock path: It refers to the path traversed by clock signal from clock source to the startpoint.

Capture clock path: It refers to the path traversed by clock signal from clock source to the endpoint.

Data path: It refers to the path traversed by the data signal from startpoint to endpoint.

In the above example, launch clock path and data path together constitute constrained signal path and capture clock path constitutes reference signal path.

Timing requirements/constraints related to a reset synchronizer

Figure 1: Reset synchronizer

- R0/D (Data input pin) is tied to 1, hence, no timing requirement related to this.

- Reset deassertion timing is required for R0/Q -> R1/D at the clock frequency at which reset deassertion is happening

- Similarly, reset deassertion timing is required for R1/Q -> functional_flops/Rbar pins

- Timing at R0/Rbar pin is not required, since R0 is placed there to absorb metastability and to come out of metastability before the next clock edge

- Timing at R1/Rbar pin is not required, since, when Rbar gets deasserted, R1/D and R1/Q are both at value "0".

- Both R0 & R1 need at least a certain pulse width at Rbar pin in order to detect the reset. This requirement is generally given in the timing model of flip-flop

- Reset synchronizer must be clocked on either same or related clock to its fanout flops

- set_false_path -to R0/Rbar

- set_false_path -to R1/Rbar

- Min-pulse-width requirement at Rbar pins modelled in timing models

Min-pulse-width-check timing paths

- Min-pulse-width requirement for the pin is picked from timing model

- User specifically specifies a "min-pulse-width" check at a specific pin using "set_min_pulse_width" command. This may be required in certain scenarios, such as a clock going out of the design through an output port, and we need to maintain a minimum duty cycle for the outgoing clock.

In-to-out paths

Constraining with respect to a virtual clock: We can consider in-to-out path as a sub-segment of a larger reg-to-reg path. And we can constrain these paths using a virtual clock. Using "set_input_delay" for input port and "set_output_delay" for output port with respect to same virtual clock, these paths can be constrained.

- Create a clock VCLK without any source

- "set_input_delay" at input_port with respect to VCLK

- "set_output_delay" at output_port with respect to VCLK

Constraining as point-to-point paths: We can constrain in-to-out paths using "set_max_delay" command as point-to-point paths. However, using this approach, we may need to apply some extra constraints as well depending upon the behavior of the tool we are using.

Where does STA fit in backend design flow

Figure 1: STA as an integral part of physical design cycle

Static Timing Analysis

Figure 1: A sample signal propagation between two sequential elements

How clock gating reduces power dissipation

Figure 2: Flip-flop internal structure

Design query: How can we construct a non-overlapping "101" sequence counter for a 32-bit input using only combinational logic? For example, for the input 10101001, the output should be 1, since there is only one non-overlapping 101 sequence.

Figure 1: Design representation

Figure 2: Block diagram representation of design

On = FED'C + G'F'ED'C

On = ED'C (F+G')

For bit 31, the upper two bits do not exist. 101XX is the expression for getting output as 1. Thus, the equation, on a similar note, can be expressed as:

O31 = ED'C

For bit 30, the expression comes out to be X101XX. So, O30 also has same logic as O31.

Figure 3: Circuit with logic for intermediate output
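The intermediate-output equation On = ED'C (F+G') can be tried out in software. The Python sketch below rests on an assumed reading of the equations above: E is bit n, D and C are the two bits below it, F and G are the two bits above it, and bits beyond the top of the bus are treated as 0, which reproduces O31 = O30 = ED'C. The function name and the `width` parameter are hypothetical, and the sketch covers only the intermediate outputs, not the complete circuit.

```python
def intermediate_outputs(value, width=32):
    """Evaluate On = E D' C (F + G') for every bit position n.

    Assumed labelling: E = bit n, D = bit n-1, C = bit n-2,
    F = bit n+1, G = bit n+2. Non-existent upper bits read as 0.
    """
    def bit(i):
        return (value >> i) & 1 if 0 <= i < width else 0

    outputs = {}
    for n in range(width - 1, 1, -1):
        e, d, c = bit(n), bit(n - 1), bit(n - 2)
        f, g = bit(n + 1), bit(n + 2)
        # E D' C detects "101"; (F + G') rejects a window whose top bit
        # is shared with a "101" starting two bits higher.
        outputs[n] = e & (1 - d) & c & (f | (1 - g))
    return outputs


# Example from the query, treated as an 8-bit input for readability.
outs = intermediate_outputs(0b10101001, width=8)
print(sum(outs.values()))
```

For the example from the query, only the window starting at the top bit produces a 1; the window starting two bits lower is rejected by the (F + G') term because it shares a bit with the first one.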

This post is in response to a query posted on our "post your query" page. In case you want to have an answer to your query, you can post a comment. We will try our best to answer.

Design query :: Combinationally count number of 1's in a 32-bit bus

Let us first create a truth table converting the number of 1's in a 4-bit stream into a 3-bit number (O2, O1, O0). The resulting truth table is shown in figure 1.

Figure 1: Truth table for 4-bit count 1's circuit

Solving the above for O2, O1 and O0 using K-maps, we get the expressions as shown in figures 2, 3 and 4 below.

Figure 2: Expression for O2

Figure 3: Expression for O1

Figure 4: Expression for O0

Figure 5: Complete block diagram of counting number of 1's
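The approach can be modelled in Python as a cross-check. The Boolean expressions below are derived here directly from the definition of the count and are not necessarily the same minimized forms as in figures 2 to 4; the way the eight 4-bit counts are added is likewise an assumption about the block diagram. Function names are hypothetical.

```python
def count_ones_4bit(a, b, c, d):
    """Return (O2, O1, O0), the 3-bit count of 1's among four input bits."""
    o2 = a & b & c & d                      # count == 4
    o0 = a ^ b ^ c ^ d                      # count is odd (1 or 3)
    # count is 2 or 3: at least one pair of bits is high, but not all four.
    any_pair = (a & b) | (a & c) | (a & d) | (b & c) | (b & d) | (c & d)
    o1 = any_pair & (1 - o2)
    return o2, o1, o0


def count_ones_32bit(value):
    """Split the 32-bit bus into eight 4-bit groups and add the group counts."""
    total = 0
    for group in range(8):
        a, b, c, d = ((value >> (4 * group + k)) & 1 for k in range(4))
        o2, o1, o0 = count_ones_4bit(a, b, c, d)
        total += 4 * o2 + 2 * o1 + o0
    return total


print(count_ones_32bit(0b10101001))
```

In hardware, the final additions would be an adder tree on the eight 3-bit outputs; the loop above only models the arithmetic.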