A time-to-digital converter (TDC) is a circuit that digitizes time: it measures the time interval between two events and represents that interval as a digital number.

TDCs are used wherever the time interval between two events needs to be determined. The two events may, for example, be the rising edges of two signals. Applications of TDCs include time-of-flight measurement circuits and all-digital PLLs (ADPLLs).

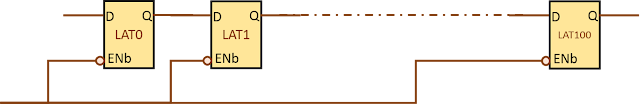

Delay line based time-to-digital converter: This is a very basic TDC. It uses a delay line, a chain of delay stages, to delay the reference signal. The other signal is used to sample the state of the delay chain: each stage of the chain drives a flip-flop or a latch that is clocked by the sample signal. The output of the TDC therefore forms a thermometer code, because a stage shows a ‘1’ if the reference signal has already passed it and a ‘0’ otherwise. The schematic diagram of the delay line based time-to-digital converter is shown in Figure 1 below:

Figure 1: Delay line based time-to-digital converter
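As a rough worked relation for the thermometer code described above, assume every delay stage has the same delay $\tau$ (a symbol introduced here for illustration; the post does not give a value, and real buffer delays vary with process, voltage and temperature). If $N$ of the flip-flops read ‘1’ when the sample edge arrives, the delayed edge has passed $N$ stages but not yet $N+1$, so the measured interval $\Delta t$ satisfies:

$$
N\,\tau \le \Delta t < (N+1)\,\tau \quad\Longrightarrow\quad \Delta t \approx N\,\tau
$$

Under the same assumption, the resolution is one stage delay $\tau$, and the longest measurable interval is roughly the number of stages times $\tau$. For instance, with a purely hypothetical $\tau = 50\,\text{ps}$ and 64 stages, a code containing ten 1s would correspond to about 500 ps, and the full range would be about 3.2 ns.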

The VHDL code for the delay line based time-to-digital converter is given below:

```vhdl
-- Module definition of the delay line based time-to-digital converter.
library ieee;
use ieee.std_logic_1164.all;

entity tdc is
  generic (
    number_of_bits : integer := 64  -- number of delay stages and output bits
  );
  port (
    retimed_clk  : in  std_logic;  -- sample signal: clocks the flip-flops
    variable_clk : in  std_logic;  -- signal that propagates down the delay line
    tdc_out      : out std_logic_vector(number_of_bits-1 downto 0);  -- thermometer code
    reset        : in  std_logic   -- asynchronous, active-high reset
  );
end entity;

architecture behavior of tdc is

  -- Delay element: a buffer cell (presumably supplied by the target cell library).
  component buffd4 is
    port (
      I : in  std_logic;
      Z : out std_logic
    );
  end component;

  -- buf_inst_out(0) is the delay line input; buf_inst_out(i) is the output of stage i.
  signal buf_inst_out : std_logic_vector(number_of_bits downto 0);

begin

  buf_inst_out(0) <= variable_clk;

  -- Delay line: a chain of number_of_bits buffd4 buffers.
  tdc_loop : for i in 1 to number_of_bits generate
  begin
    buf_inst : buffd4
      port map (
        I => buf_inst_out(i-1),
        Z => buf_inst_out(i)
      );
  end generate;

  -- Sample every tap of the delay line on the rising edge of retimed_clk.
  process (reset, retimed_clk)
  begin
    if reset = '1' then
      tdc_out <= (others => '0');
    elsif rising_edge(retimed_clk) then
      tdc_out <= buf_inst_out(number_of_bits downto 1);
    end if;
  end process;

end architecture;
```
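The `tdc_out` vector above is a thermometer code, so a downstream block usually needs it as a plain binary number. The following is a minimal sketch of one way to do that conversion, by counting the 1s in the code. It is not part of the original design: the entity name `therm2bin`, the ports `therm_in` and `count_out`, and the generic `count_width` are all hypothetical names chosen for illustration.

```vhdl
library ieee;
use ieee.std_logic_1164.all;
use ieee.numeric_std.all;

-- Hypothetical helper (not from the original post): converts a thermometer
-- code into a binary count of the stages that read '1'.
entity therm2bin is
  generic (
    number_of_bits : integer := 64;  -- width of the thermometer code
    count_width    : integer := 7    -- must be wide enough for 0 .. number_of_bits
  );
  port (
    therm_in  : in  std_logic_vector(number_of_bits-1 downto 0);
    count_out : out std_logic_vector(count_width-1 downto 0)
  );
end entity;

architecture rtl of therm2bin is
begin

  -- Combinational ones-counter over the whole input vector.
  process (therm_in)
    variable ones : integer range 0 to number_of_bits;
  begin
    ones := 0;
    for i in therm_in'range loop
      if therm_in(i) = '1' then
        ones := ones + 1;
      end if;
    end loop;
    count_out <= std_logic_vector(to_unsigned(ones, count_width));
  end process;

end architecture;
```

In use, `therm_in` would be connected to `tdc_out` of the `tdc` entity. Counting every 1, instead of searching for the first 1-to-0 transition, is a deliberate choice in this sketch: an isolated wrong bit (a "bubble") in the thermometer code then shifts the result by at most one count.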

Hope you’ve found this post useful. Let us know what you think in the comments.