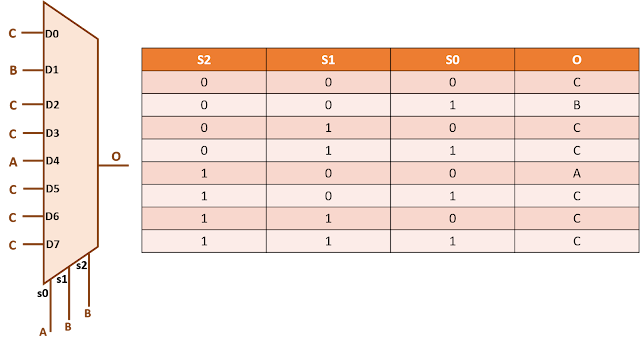

Problem statement: An 8:1 multiplexer selects one out of three inputs (A, B and C) based upon different combinations of S2, S1 and S0, as shown in the figure below. Minimize the logic, keeping in mind that B is the most timing-critical input.

Solution:

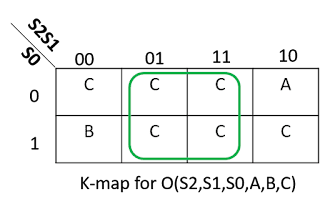

An 8-input MUX has one 4-input AND gate and one 8-input OR gate between each input and the output. However, since the same signal is connected to many of the inputs, there is scope for logic minimization. Let us use a K-map to minimize the logic for this problem. The K-map is as shown below:

Writing the Boolean expression, we get:

O = S2'S1'S0' C + S2'S1'S0 B + S1 C + S2S1'S0' A + S2S1'S0 C

Collecting the C terms gives S1 + S1' (S2'S0' + S2S0), and S2'S0' + S2S0 is the XNOR of S2 and S0, written here as (S2 ⊕ S0)':

O = C (S1 + S1' (S2 ⊕ S0)') + S2'S1'S0 B + S2S1'S0' A

O = C (S1 + (S2 ⊕ S0)') + S2'S1'S0 B + S2S1'S0' A [Using A + A'B = A + B]

If we analyze carefully, we see that O is obtained by OR-ing three terms: one for A, one for B and one for C. The resulting structure is shown below:
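The minimized expression can be checked exhaustively against the original multiplexer. The sketch below is only an illustration: the input mapping (C on every select code except 001, which takes B, and 100, which takes A) is inferred from the sum-of-products expression above, and the function names are made up for this example. Expanding that sum of products, C is selected when S1 is 1 or when S2 and S0 are equal.

```python
from itertools import product

def inv(x):
    """Logical NOT on a 0/1 value."""
    return 1 - x

def mux_reference(s2, s1, s0, a, b, c):
    """8:1 mux; data-input mapping is an assumption inferred from the SOP."""
    data = [c, b, c, c, a, c, c, c]  # index = S2 S1 S0 read as a binary number
    return data[(s2 << 2) | (s1 << 1) | s0]

def mux_minimized(s2, s1, s0, a, b, c):
    """Three-term form: one term each for A, B and C."""
    term_c = c & (s1 | inv(s2 ^ s0))      # C (S1 + XNOR(S2, S0))
    term_b = b & inv(s2) & inv(s1) & s0   # S2' S1' S0 B
    term_a = a & s2 & inv(s1) & inv(s0)   # S2 S1' S0' A
    return term_a | term_b | term_c

# Compare both forms over all 64 combinations of selects and data inputs.
mismatches = [v for v in product((0, 1), repeat=6)
              if mux_reference(*v) != mux_minimized(*v)]
print(len(mismatches))  # 0
```

An empty mismatch list means the three-term form implements the same function as the full 8:1 multiplexer under the assumed mapping.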

Since B is the most timing-critical input, there should be minimum logic between B and the output. In other words, B should enter the logic as close to the output as possible. In the above figure, we see that there is a 4-input AND gate and a 3-input OR gate between B and O. We can reduce the logic between B and O by breaking the 3-input OR gate into 2-input OR gates such that B's term feeds the one closest to the output. Similarly, we can break the 4-input AND gate into a 3-input AND gate for the select signals followed by a 2-input AND gate that combines its output with B. Thus, we are left with one 2-input AND gate and one 2-input OR gate between B and O, as shown in the figure below.
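The restructuring changes only how the gates are grouped, not the function. A minimal sketch of that check follows, with illustrative names (`flat`, `b_late`, `sel_b`, `others`) that are not from the original figures: the flat form uses the 4-input AND and 3-input OR, while the restructured form lets B pass through only a 2-input AND and a 2-input OR.

```python
from itertools import product

def inv(x):
    """Logical NOT on a 0/1 value."""
    return 1 - x

def flat(s2, s1, s0, a, b, c):
    """Original structure: B passes a 4-input AND, then a 3-input OR."""
    term_a = a & s2 & inv(s1) & inv(s0)
    term_b = b & inv(s2) & inv(s1) & s0
    term_c = c & (s1 | inv(s2 ^ s0))
    return term_a | term_b | term_c

def b_late(s2, s1, s0, a, b, c):
    """Restructured: B passes only a 2-input AND and a 2-input OR."""
    sel_b = inv(s2) & inv(s1) & s0        # 3-input AND on select lines only
    term_a = a & s2 & inv(s1) & inv(s0)
    term_c = c & (s1 | inv(s2 ^ s0))
    others = term_a | term_c              # 2-input OR, off the B path
    return (b & sel_b) | others           # the only two gates B goes through

# Exhaustive equivalence check over all 64 input combinations.
mismatches = [v for v in product((0, 1), repeat=6) if flat(*v) != b_late(*v)]
print(len(mismatches))  # 0
```

The select-only AND and the A/C OR still exist, but they sit on paths that are assumed to be less timing-critical than B.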

We see that there is still a possibility of logic restructuring between B and O. De Morgan's theorem states that, for any two signals A and B,

A + B = (A' B')'

Going by this, we can convert the OR gate at the output into a NAND gate with inverted (bubbled) inputs, as shown in the figure below.

The bubbles at the inputs of the NAND gate can be moved to the outputs of the respective drivers. Put another way, there are now just two NAND gates between B and O.
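The bubble-pushing step can be sketched in the same way. In this illustration, which uses hypothetical signal names, moving the bubbles back onto the drivers turns the 2-input AND on the B path into a NAND and the OR that combines the A and C terms into a NOR; the output OR becomes a plain NAND.

```python
from itertools import product

def nand(x, y):
    """2-input NAND on 0/1 values."""
    return 1 - (x & y)

def nor(x, y):
    """2-input NOR on 0/1 values."""
    return 1 - (x | y)

def and_or_form(b, sel_b, term_a, term_c):
    """Before De Morgan: 2-input AND followed by 2-input OR."""
    return (b & sel_b) | (term_a | term_c)

def nand_nand_form(b, sel_b, term_a, term_c):
    """After bubble pushing: B passes through two NAND gates."""
    return nand(nand(b, sel_b), nor(term_a, term_c))

# The two forms agree for all 16 combinations of the four signals.
mismatches = [v for v in product((0, 1), repeat=4)
              if and_or_form(*v) != nand_nand_form(*v)]
print(len(mismatches))  # 0
```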

Thus, we have achieved our purpose of

- Minimizing the logic

- Ensuring that there is minimum logic between B and O, since B is the most timing-critical input.

We need to keep in mind that there may be more than one solution to a logic minimization problem. Can you think of a better realization of the circuit in question? What would the realization of the circuit have been if C were the most timing-critical input?