The dynamic power of any circuit depends on its switching activity and its total capacitive load. In digital VLSI designs, the most frequently switching elements are the clock elements: the buffers and other gates that carry the clock signal to all the synchronous elements in the design. In some designs, clock switching power can contribute as much as 50% of the total power. Because power is such a critical aspect, any effort that stops clock elements from toggling needlessly can reduce the total power by a significant amount. Clock gating is one of the techniques used to save the dynamic power of clock elements in the design.
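
The dependence on switching activity and capacitive load is usually written with the standard first-order CMOS expression below. The symbols are not from the original text and are introduced here only for illustration:

$$
P_{\text{dyn}} = \alpha \, C_L \, V_{DD}^{2} \, f
$$

Here $\alpha$ is the activity factor (the average number of 0-to-1 transitions per clock cycle), $C_L$ is the switched capacitance, $V_{DD}$ is the supply voltage, and $f$ is the clock frequency. Under this convention an ungated clock net has $\alpha = 1$, the highest value any net can have, which is why clock elements tend to dominate dynamic power. Clock gating lowers the effective $\alpha$ of the gated part of the clock network.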

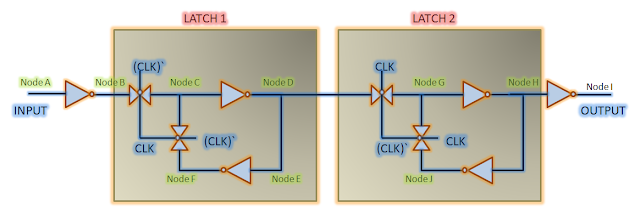

Principle behind clock gating: The principle behind clock gating is to stop the clock to those sequential elements whose data is not toggling. RTL code describes only data transfer. It may contain a condition under which a flip-flop does not toggle its output. Figure 1 shows such a condition: FF1's output remains stable as long as EN = 0. The right-hand side shows the equivalent circuit, in which EN has been translated into an AND gate in the clock path. This is a very simplified version of what modern synthesis tools do to implement clock gating.

Figure 1: Clock gating implementation
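
The equivalence between the two circuits in Figure 1 can be checked with a small behavioural model. The sketch below is only an illustration, not what a synthesis tool does: the function names are hypothetical, and it assumes that D and EN are held stable for the whole clock cycle, so the AND gate never sees EN change while the clock is high.

```python
import random

def enable_flop(d_seq, en_seq, q0=0):
    """Flip-flop with an enable: Q takes D on a clock edge only when EN = 1."""
    q, trace = q0, []
    for d, en in zip(d_seq, en_seq):
        if en:
            q = d
        trace.append(q)
    return trace

def gated_clock_flop(d_seq, en_seq, q0=0):
    """Plain flip-flop clocked by GCLK = CLK AND EN.

    Returns the Q trace and the number of rising edges that reach the flop.
    Assumption: D and EN are stable across each cycle (CLK low, then high).
    """
    q, trace, edges, prev_gclk = q0, [], 0, 0
    for d, en in zip(d_seq, en_seq):
        for clk in (0, 1):              # one cycle: low phase, then high phase
            gclk = clk & en             # the AND gate in the clock path
            if prev_gclk == 0 and gclk == 1:
                q = d                   # rising edge of the gated clock
                edges += 1
            prev_gclk = gclk
        trace.append(q)
    return trace, edges

random.seed(0)
d = [random.randint(0, 1) for _ in range(1000)]
en = [random.randint(0, 1) for _ in range(1000)]

q_ref = enable_flop(d, en)
q_gated, edges = gated_clock_flop(d, en)
print("outputs match:", q_ref == q_gated)
print(edges, "of", len(d), "clock edges reach the gated flop")
```

Both models produce the same Q sequence, but the gated flop sees a clock edge only in cycles where EN = 1. The edges that never arrive are where the dynamic power saving comes from.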

Implications of clock gating: Implementing clock gating is not as simple as Figure 1 suggests. Several things must be taken into account, including:

- Timing of enable (EN) signal: Gating the clock can cause a glitch on the clock if the architectural implementation does not guard against it. Clock gating checks address what must be taken care of, with regard to timing, in a clock gating implementation.

- Area/power/latency trade-off: As Figure 1 shows, clock gating moves data-path logic into the clock path, which can increase the overall clock latency. There can also be an area penalty if the clock gating structure is larger than the logic it replaces. Power can even increase instead of decreasing if the structure of only one or two flops is replaced by clock gating, depending on the switching power of the clock gating structure versus that of the structures inside the flip-flops. Normally, a group of flops with a similar EN condition is chosen and a common clock gate is inserted for all of them, which minimizes the area and power penalties.
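
The glitch risk described under "Timing of enable (EN) signal" above can be illustrated with sampled waveforms. The sketch below is a zero-delay toy model with hypothetical function names. It compares the plain AND gate of Figure 1 with one commonly used remedy that the text above does not describe and that is included here as an assumption: passing EN through a latch that is transparent only while the clock is low.

```python
def pulse_widths(wave):
    """Length, in samples, of each run of consecutive 1s in a waveform."""
    widths, run = [], 0
    for v in wave:
        if v:
            run += 1
        elif run:
            widths.append(run)
            run = 0
    if run:
        widths.append(run)
    return widths

def and_gate(clk, en):
    """GCLK = CLK AND EN, with EN applied directly to the gate."""
    return [c & e for c, e in zip(clk, en)]

def latch_and_gate(clk, en):
    """EN goes through a latch that is transparent while CLK = 0
    and holds its value while CLK = 1, then is ANDed with CLK."""
    out, held = [], 0
    for c, e in zip(clk, en):
        if c == 0:
            held = e                    # latch transparent during the low phase
        out.append(c & held)
    return out

# Four samples per clock period: low, low, high, high.
clk = [0, 0, 1, 1] * 4
# EN rises in the middle of one high phase and falls in the middle of the next.
en = [0] * 7 + [1] * 4 + [0] * 5

print(pulse_widths(and_gate(clk, en)))        # [1, 1]: two half-width pulses
print(pulse_widths(latch_and_gate(clk, en)))  # [2]: one full-width pulse
```

A full clock pulse is two samples wide in this model. With the plain AND gate, an EN transition during the high phase chops the clock into one-sample pulses, which is the kind of glitch that can falsely trigger or corrupt a flop. With the latch, EN can only take effect during the low phase, so every pulse that reaches the flop is full width.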
